9.1 Environment

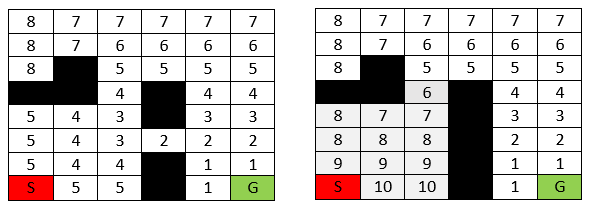

So far we have seen Greedy, Dijsktra, A*, Weighted A*, Backward A*, and ARA*. Each of these searches work only in static environments. But, in dynamic environments they won’t work because the $g-value$ of nodes will change with change in environment. In Figure 9.1, we can see that changes in $g-values$ when the door closes.

Figure 9.1 Change in environment

9.2 D* Lite and Anytime D*

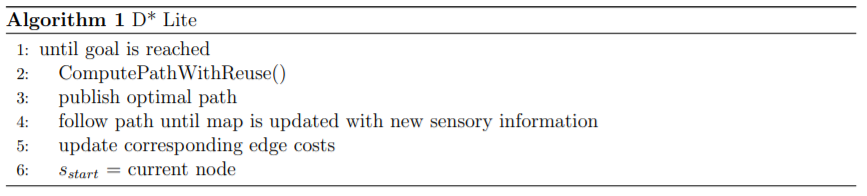

There’s a simple solution to this: update the $g-values$ using new sensory information. The robot finds a solution, executes it, and finds that the environment has changed; updates $g-values$ according to the environment, set current node to $s_{start}$ and replan. In simple terms, D* repairs the search locally i.e. based on the information from sensors.

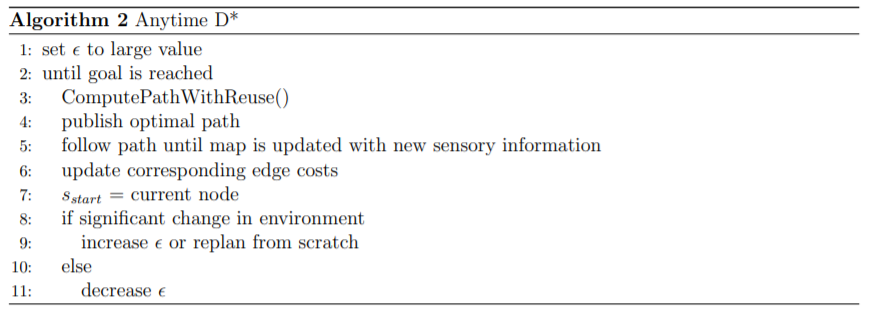

We can even incorporate anytime algorithm with D*. In algorithm 2, we can see that at every iteration; we get closer to optimality as well as the search is repaired using new sensory information.

Note: One must understand why the D* searches from goal to start. It is obvious that the start node can change whenever the environment changes. Therefore, the search begins from goal i.e $g-value$ is the total length from node $s$ to node $s_{goal}$.

</> GitHub

9.3 References

[1]. 16-350 Planning Techniques for Robotics - link

[2]. Choset H., Lynch K. M., Hutchinson S. Kantor G., Burgard W., Kavraki L. E., Thrun Sebastian. Principles of Robot Motion. MIT Press